- Eindhoven, Netherlands

- GitHub

- Google Scholar

- Email @University Webpage

Shruthi Gowda

I'm a PhD candidate in Brain-Inspired Adaptive AI at the Data & AI Cluster at TU Eindhoven, Netherlands. My research focuses on bridging the workings of the brain and neural networks, leveraging insights from cognitive biases, multi-memory systems, and sparse coding to design and develop effective and efficient lifelong learning models. I have worked across vision and language modalities, using semantic priors and LLMs to improve transfer and out-of-distribution robustness. I am currently exploring multi-modality and world models to couple perception with predictive modeling and long-horizon adaptation.

With 10+ years in industry as an AI Research Engineer and Software Engineer, I have built machine learning and embedded software solutions for Autonomous Driving, Quality Inspection, and Healthcare. Combining industry experience with academic curiosity, I’m passionate about developing intelligent systems that are both computationally efficient and grounded in cognitive principles.

Research Interests: Brain-inspired AI, Cognitive Biases, Generalization (Continual/Lifelong Learning and Robustness in DNNs), Foundation and Multi-Modal Networks

More details in my CV

Education

- PhD in Neuro-inspired Adaptive AI, 2023 – Present
  Data & AI Cluster, Mathematics & Computer Science Dept, TU Eindhoven, Netherlands

- MSc in Computer Science, 2010 – 2012
  Universitat Politècnica de Catalunya (UPC), Spain & Université Catholique de Louvain (UCL), Belgium

- BE in Electronics and Communication Engineering, 2006 – 2010
  Visvesvaraya Technological University (VTU), India

Professional Experience

- AI Research Engineer, 2019 – 2023
  NavInfo Europe B.V., Advanced Research Lab
  Researched and implemented a complete scene-understanding suite (traffic-sign recognition, road-surface inspection, and HD-map change detection) now powering NavInfo’s HD Maps and autonomous-driving platform.

- Senior Software Engineer → Team Lead, 2013 – 2019
  National Instruments, Machine Vision Lab
  Drove the design and delivery of the Vision Development Module, NI’s flagship computer-vision library for industrial inspection.

News

- Aug 2025 - 🎤 Invited talk on "Brain-inspired Adaptive AI" at the ENFIELD AI Summer School at the Budapest University of Technology and Economics (BME).

- May 2025 - 🎤 Presented at the Adaptive AI Workshop at Politecnico di Milano, aiming to bridge academic research with industrial applications.

- Aug 2024 - 📜 Presented our paper on a Cognitive SSL framework at the CoLLAs Conference in Pisa.

- Aug 2024 - 📜 Presented our paper DUCA at the journal track of the CoLLAs Conference in Pisa.

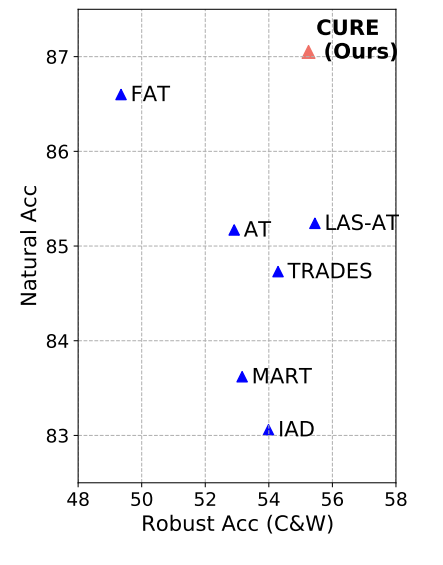

- May 2024 - 📜 Presented our paper CURE for Robustness at the ICLR Conference in Vienna.

- March 2024 - 👩‍💼 Participated in the Women in AI panel discussion organised by CAIRNE, discussing diversity in technology and research careers.

- Oct 2023 - 📜 Paper Dual Cognitive Architecture (DUCA) for Continual Learning accepted at the TMLR journal.

- Sep 2022 - 📜 Paper Survey of Real-Time Object Detection Networks accepted at the TMLR journal with Survey Certification.

- Aug 2022 - 📜 Presented our paper InBiaseD at the CoLLAs Conference.

- 2016 - 🏅 Received the "Engineering Excellence Award" in recognition of leading an innovative idea for a Deep Learning-based Machine Inspection product line at National Instruments, Texas.

- 2013 - 🏅 Received the "Rookie of the Year Award" for outstanding achievement and contribution at National Instruments, Texas.

- 2013 - 🎓 Graduated "cum laude" from a Master's programme at two universities (UPC and UCL).

- 2010-2012 - 🏅 Received the "Erasmus Mundus Fellowship" for pursuing a Master's in a consortium of European universities.

Selected Publications

Full list at Google Scholar

Conserve-Update-Revise to Cure Generalization and Robustness Trade-off in Adversarial Training

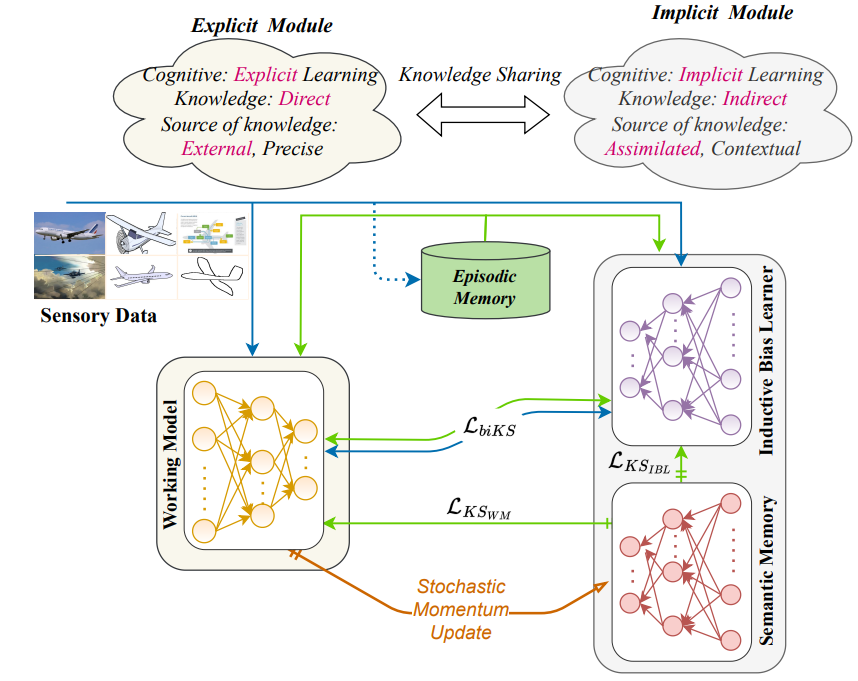

Dual Cognitive Architecture: Incorporating Biases and Multi-Memory Systems for Lifelong Learning

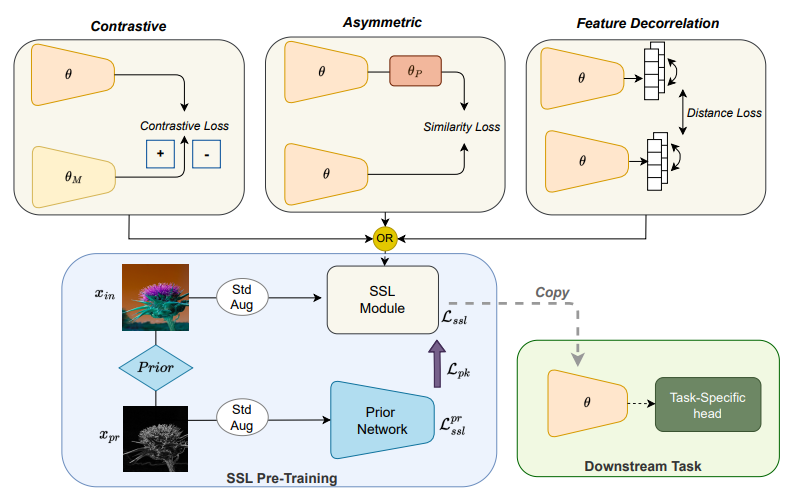

Can We Break Free from Strong Data Augmentations in Self-Supervised Learning?

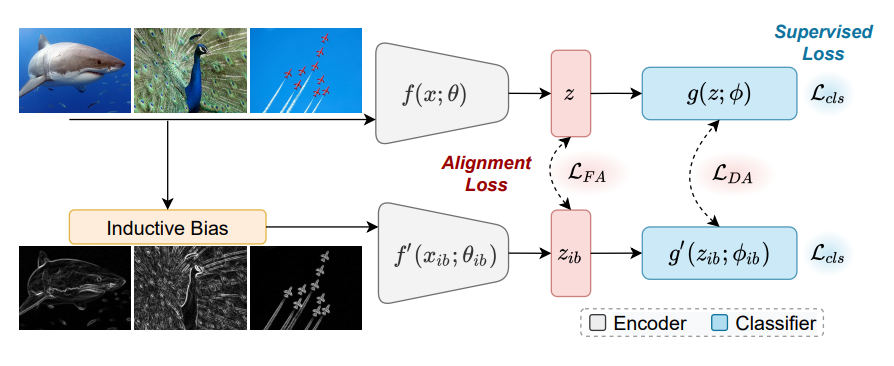

InBiaseD: Inductive Bias Distillation to Improve Generalization and Robustness through Shape-awareness

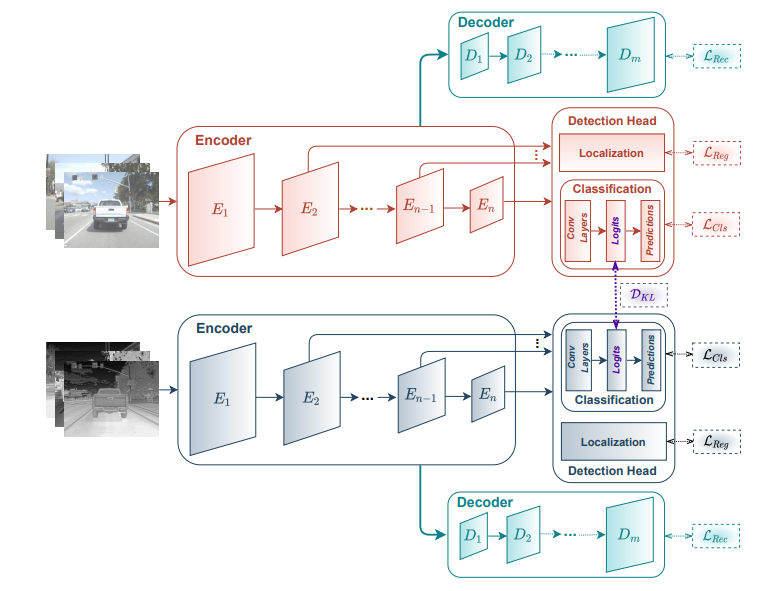

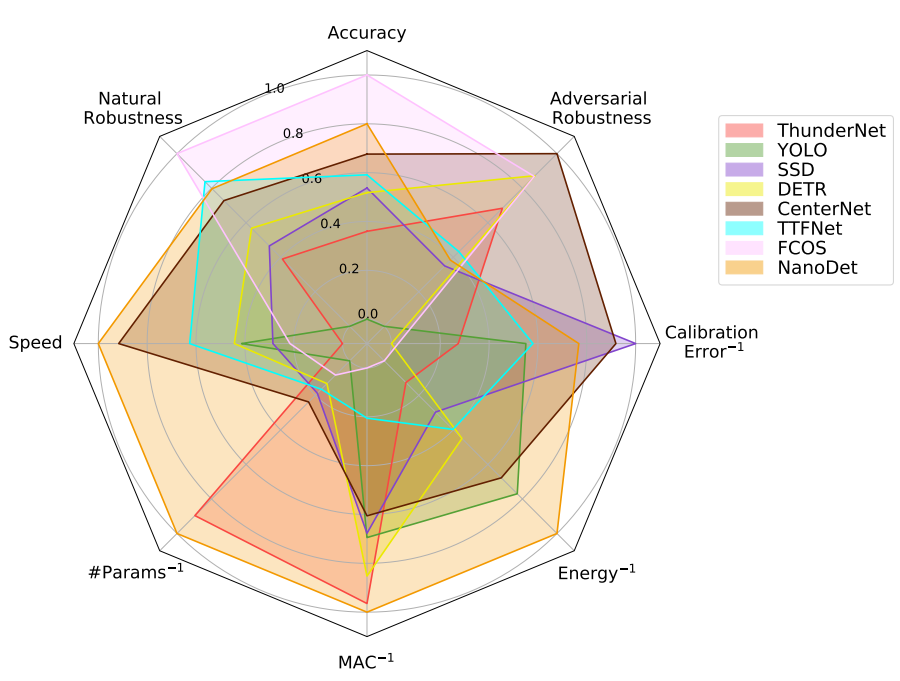

A Comprehensive Study of Real-Time Object Detection Networks Across Multiple Domains: A Survey

Projects

ENFIELD (EU Horizon Project)

A Europe-wide Centre of Excellence advancing the pillars of Adaptive, Green, Human-Centric, and Trustworthy AI, with research and implementation focused on lifelong learning, robustness, and fairness. ENFIELD connects top research labs and industry to deliver foundational advances and high-impact use cases across healthcare, energy, manufacturing, and space, coupling efficient (green) AI with reliability and governance so that systems can adapt safely in dynamic real-world environments.

SAFEXPLAIN (EU Horizon Project)

Designed safety patterns and built novel XAI components for critical embedded systems in autonomous driving. Our automotive case targets end-to-end detection of road users and traffic scenes, scene segmentation, and trajectory prediction of road users (including vulnerable road users), with explainability and safety built in, enabling timely collision-avoidance decisions in complex traffic. The work feeds into a cross-sector programme (automotive/space/railway) and aligns with functional-safety requirements to accelerate trustworthy deployment.

Scene Understanding & Object Recognition Suite for ADS

Designed and engineered a real-time perception framework for multi-camera input fusion to detect and track road users, recognize traffic signs, and parse lanes/road layout for Autonomous Driving Systems (ADS). The suite combines state-of-the-art object detection and semantic segmentation across 2D images/video and 3D point clouds, with synthetic/generative data augmentation to strengthen coverage of rare events. The end-to-end pipeline spans data sourcing and curation, model design/optimization, and embedded deployment.

Anonymizer for GDPR Compliance

Implemented an on-device perception module that detects and irreversibly blurs personal data (faces, full bodies, and license plates) in real time across video streams and stored media, enabling GDPR-compliant collection, processing, and sharing. The system follows privacy-by-design principles, with configurable per-jurisdiction blur policies and QA hooks (mask coverage, false-negative audits) for verifiable compliance.

Change Rate Detection for HD Maps

Built algorithms to analyze geo-tagged fleet video and automatically surface infrastructure changes (e.g., new/removed traffic signs, lane-geometry shifts, road works), prioritizing regions with high change velocity for HD map updates. The pipeline combines event-based scenario identification (cut-ins/outs, lane changes, lead-vehicle deceleration) with spatial–temporal clustering and confidence scoring, automating HD map maintenance for autonomous vehicle navigation.

NI Machine Vision - Deep Learning Library

Pioneered the integration of deep learning into NI’s inspection stack, covering defect inspection and an inference pipeline optimized for NI real-time/edge hardware. The solution fuses DL with classical CV (pre/post-processing, ROI proposals, rule checks) to boost accuracy while preserving determinism. To meet strict latency/throughput targets on CPUs and embedded targets, optimized kernels and pipelines using Intel IPP, Intel DAAL/oneDAL, and OpenVINO, with Intel TBB for multithreading and NVIDIA TensorRT where applicable.

NI Machine Vision - Classical Computer Vision Library

Led the development of a production-grade machine-vision library delivering reliable inspection on NI embedded targets and Windows. Built core algorithms for industrial use cases: geometric/template pattern matching, robust OCR, high-accuracy 1D/2D barcode reading, edge/contour-based metrology, and blob and texture analysis to catch fine surface defects (metals, fabrics, PCBs) and identify misprints in low-quality images. Delivered as configurable LabVIEW VIs and callable APIs (C/C++/C#).

Patents

- European Patent 22159288.4: AI Based Change Detection System for Executing a Method To Detect Changes In Geo-Tagged Videos To Update HD Maps.

- US Patent 17/894,870: Method and System for Instilling Shape Awareness to Self-Supervised Learning Domain.

- Dutch Patent 020065 NL-PD: Leveraging Shape Information in Few-shot Learning.

- US Patent 019922: Method to Add Inductive Bias into Deep Neural Networks.

- US Patent 17/581,759: Deep Learning Based Multi-Sensor Detection System.

- Dutch Patent 020050: A Cognitive-inspired Multi-Module Architecture for Continual Learning.

Dance Performances

Watch more of my dance performances at various events in the Netherlands on my YouTube channel.

Travel

Mapping the world, one journey at a time.